| System 1 | System 2 |
|---|---|
| Unconscious reasoning | Conscious reasoning |
| Judgments based on intuition | Judgments based on critical examination |
| Processes information quickly | Processes information slowly |
| Hypothetical reasoning | Logical reasoning |
| Large capacity | Small capacity |
| Prominent in animals and humans | Prominent only in humans |
| Unrelated to working memory | Related to working memory |
| Operates effortlessly and automatically | Operates with effort and control |
| Unintentional thinking | Intentional thinking |
| Influenced by experiences, emotions, and memories | Influenced by facts, logic, and evidence |
| Can be overridden by System 2 | Used when System 1 fails to form a logical/acceptable conclusion |
| Prominent since human origins | Developed over time |
| Includes recognition, perception, orientation, etc. | Includes rule following, comparisons, weighing of options, etc. |

Friday, June 19, 2015

Why Would an AI System Need Phenomenal Consciousness?

In my last post on Jesse Prinz, we learned about the distinction between immediate, phenomenal awareness and the more deliberative consciousness that operates on the contents of short-term and long-term memory. From moment to moment in our experience, there are mental contents in our awareness. Not all of those contents make it into the global workspace and become available to reflective, deliberative thought, memory, or other cognitive functions. That is, there are contents in phenomenal awareness that are experienced and then simply lost. They cease to be anything to you, or to be part of the continuous narrative of experience that you reconstruct in later moments, because they never reach the neural processes that would capture them and make them available to you later.

We also know that these contents of phenomenal consciousness are most closely associated with the qualitative feels from our sensory periphery. That is, phenomenal awareness is filled with the smells, tastes, colors, feels, and sounds of our sensory inputs. Phenomenal awareness is filled with what some philosophers call qualia.

Let me add to this account and see what progress we can make on the question of building a conscious AI system.

Daniel Kahneman won the Nobel Prize for work done largely with Amos Tversky (who died before it was awarded), and that work is closely tied to what is called Dual Process Theory of the human mind. We possess a set of quick, sloppy cognitive functions called System 1, and a more careful, slower, more deliberative set of functions called System 2.

In short, System 1 gains speed at the cost of accuracy, and System 2 gives up speed for a reduction in errors.
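As a loose analogy only, that division of labor can be sketched in code: a cheap heuristic answers first, and a slower, explicit procedure runs only when a check on the heuristic’s answer fails, echoing the table’s note that System 2 is used when System 1 fails to reach an acceptable conclusion. The puzzle here (a bat and a ball cost $1.10 together, and the bat costs $1.00 more than the ball) is the familiar cognitive-reflection example associated with Kahneman, not something from this post, and the function names are hypothetical. Nothing here models real neural processes.

```python
from fractions import Fraction

# Hypothetical illustration: a bat and a ball cost $1.10 together, and the
# bat costs $1.00 more than the ball. How much does the ball cost?

def system1(total, difference):
    # Fast heuristic: grab the salient numbers and subtract. Cheap, often wrong.
    return total - difference

def system2(total, difference):
    # Slow, explicit algebra: ball + (ball + difference) == total.
    return (total - difference) / 2

def consistent(total, difference, ball):
    # A quick check on whether a proposed answer satisfies the problem.
    return ball + (ball + difference) == total

def answer(total, difference):
    # Try System 1 first; fall back to System 2 only when the check fails.
    guess = system1(total, difference)
    if consistent(total, difference, guess):
        return guess, "system1"
    return system2(total, difference), "system2"

# Exact fractions avoid floating-point rounding in the dollar amounts.
print(answer(Fraction("1.10"), Fraction("1.00")))
```

The intuitive ten-cent answer fails the check (the bat would cost $1.10 and the total $1.20), so the slow path produces the five-cent answer. The design choice worth noticing is that the fast path is always tried first and the expensive path is paid for only when needed.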

The evolutionary influences that led to this bifurcation are fairly widely agreed upon. System 1 gets us out of difficulties when action has to be taken immediately, so we don’t get crushed by a falling boulder, fall from the edge of a precipice, get eaten by a charging predator, or get smacked in the head by a flying object. But when time and circumstance allow for rational deliberation, we can think things through, make longer-term plans, strategize, problem solve, and so on.

An AI system, depending on its purpose, need not be similarly constrained. An AI system may not need to have both sets of functions, and the medium of construction of an AI system may not require tradeoffs to such an extent. Conduction speed along neural cells is about 150 meters per second. By the time the information about the baseball that is flying at you gets through your optic nerve, through the V1 visual cortex, and up to the prefrontal cortex for serious contemplation, the ball has already hit you in the head. Signals in silicon circuitry, by contrast, travel at a substantial fraction of the speed of light. We may not have to give up accuracy for speed to such an extent.

Evolution favored false positives over false negatives in the construction of many systems. It’s better to mistake a boulder for a bear, as they say, than a bear for a boulder. In many cases, a better-safe-than-sorry strategy does more for an organism’s contribution to the gene pool.
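The boulder-and-bear point is an asymmetric-cost threshold choice, and a few lines make the tradeoff concrete. This is a sketch under loudly stated assumptions: the detector scores, the bear/not-bear labels, and the two costs are invented purely for illustration, and `errors`, `cost`, and `best_threshold` are hypothetical helpers.

```python
# Invented data, for illustration only: detector scores for shapes glimpsed
# at the edge of vision, paired with whether the shape really was a bear.
SIGHTINGS = [
    (0.9, True), (0.7, True), (0.4, True),
    (0.6, False), (0.3, False), (0.2, False), (0.1, False),
]

# Assumed costs: missing a bear is far worse than fleeing from a boulder.
MISS_COST = 100
FALSE_ALARM_COST = 1

def errors(threshold):
    """Count (false positives, false negatives) if we flee at score >= threshold."""
    false_pos = sum(1 for score, bear in SIGHTINGS if score >= threshold and not bear)
    false_neg = sum(1 for score, bear in SIGHTINGS if score < threshold and bear)
    return false_pos, false_neg

def cost(threshold):
    """Total cost of a threshold under the assumed, lopsided penalties."""
    false_pos, false_neg = errors(threshold)
    return false_pos * FALSE_ALARM_COST + false_neg * MISS_COST

def best_threshold(candidates):
    """Pick the candidate threshold with the lowest total cost."""
    return min(candidates, key=cost)

for t in (0.8, 0.5, 0.35, 0.15):
    print(t, errors(t), cost(t))
print("best:", best_threshold([0.8, 0.5, 0.35, 0.15]))
```

With these made-up numbers, a cautious threshold of 0.8 raises no false alarms but misses two bears, while the jumpier 0.35 flees from one boulder and never misses a bear, so it wins. Change the assumed costs and the best threshold moves, which is the sense in which an engineered system need not inherit evolution’s particular setting.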

We need not give up accuracy for speed with AI systems, and we need not construct them to make the systematic errors we do.

The neural processes that are monitoring the multitude of inputs from my sensory periphery are hidden from my conscious awareness. The motor neurons that fire, the sodium ions that traverse the cell membranes, and the neurotransmitters that cross the synaptic gaps when I move my arm are not events that I can see, or detect in any fashion, as neural events. I experience them as the sensation of my arm moving. From my perspective, moving my arm feels one way. But the neurochemical events that are physically responsible are not available to me as neurochemical events. From my perspective, a particular amalgam of neurochemical events tastes like sweetness, or hurts like a pin prick, or looks like magenta.

It would appear that evolution stumbled upon this sort of condensed, shorthand monitoring system to make fast work of categorizing certain classes of phenomenal experience for quick reference and response. If the physical system in humans is capable of producing qualia that are experienceable from the subject’s point of view (it’s important to note that whether qualia are even real things is a hotly debated question), then presumably a physical AI system could be built that generates them too. Not even the fiercest epiphenomenalist or modern property dualist denies mind/brain dependence. But a legitimate question is: do we want or need to build an AI system with them? What would be the purpose, aside from intellectual curiosity, of building qualia into an AI system? If AI systems can be better designed than the systems that evolution built, and if AI systems need not be constrained by the tradeoffs, processing speed limitations, or other compromises that led to the particular character of human consciousness, then why put them in there?

Monday, June 8, 2015

Artificial Intelligence and Conscious Attention--Jesse Prinz's AIR theory of Consciousness

Jesse Prinz has argued that consciousness is best understood as mid-level attention.

Low-level representers in the brain are neurons that perform simple discrimination tasks such as edge or color detection. They are activated early in the processing of stimuli from the sensory periphery.

(A poorly taken, copyright-violating picture from Michael Gazzaniga’s Cognitive Neuroscience textbook.)

The activation of a horizontal edge detector, by itself, doesn’t constitute organized awareness of the object, or even of the edge.
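Low-level detection of this kind is easy to make concrete. Below is a minimal sketch, assuming a tiny made-up grayscale image and a bare vertical-difference filter (a stripped-down relative of the Sobel operator, not anything taken from Gazzaniga’s textbook). The `horizontal_edges` function and its `threshold` parameter are hypothetical.

```python
# A made-up 5x5 grayscale patch (0 = dark, 9 = bright): dark on top,
# bright below, so there is one horizontal edge between rows 1 and 2.
IMAGE = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [9, 9, 9, 9, 9],
    [9, 9, 9, 9, 9],
    [9, 9, 9, 9, 9],
]

def horizontal_edges(image, threshold=5):
    """Return (row, col) positions where brightness jumps from above to below.

    For each interior pixel, subtract the pixel above from the pixel below.
    A large difference means a horizontal edge runs through that spot.
    """
    hits = []
    for r in range(1, len(image) - 1):
        for c in range(len(image[0])):
            response = image[r + 1][c] - image[r - 1][c]
            if abs(response) >= threshold:
                hits.append((r, c))
    return hits

print(horizontal_edges(IMAGE))
```

The output is only a list of grid positions where brightness changes. Nothing in it represents a whole object, located in depth and seen from a point of view, which is the gap between a firing edge detector and organized awareness.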

Neuron complexes in human brains are also capable of very high-level, abstract representation. In a famous study, “Invariant visual representation by single neurons in the human brain,” Quiroga, Reddy, Kreiman, Koch, and Fried discovered the so-called Halle Berry neuron using electrodes implanted in different regions of the brains of some test subjects. This neuron’s activity was correlated with a wide range of Halle Berry images.

What’s really interesting here is that this neuron became active in response to quite varied photos and line drawings of Halle Berry, from different angles, in different lighting, in a Catwoman costume, and even, remarkably, in response to the text “Halle Berry.” That is, this neuron plays a role in the firing patterns for a highly abstract concept of Halle Berry.

Prinz is interested in consciousness conceived as mid-level representational attention that lies somewhere between these two extremes. “Consciousness is intermediate level representation. Consciousness represents whole objects, rich with surface details, located in depth, and presented from a particular point of view.” During the real-time moments of phenomenal awareness, various representations come to take up our attention in the visual field. Prinz argues that “Consciousness arises when we attend, and attention makes information available to working memory. Consciousness does not depend on storage in working memory, and, indeed, the states we are conscious of cannot be adequately stored.”

When you look at a Necker cube, you can first be aware of the lower left square as the leading face. Then you can switch your awareness to seeing the upper right square as the leading face. So your attention has shifted from one representation to another.

That is the level at which Prinz locates the mercurial notion of consciousness, and from which he tries to develop a predictive theory based on the empirical evidence. And Prinz goes to some lengths to argue that consciousness in this sense is not what’s moved into working memory; it’s not necessarily the contents that have become available to the global workspace, such as when they are stored for later access. These contents may or may not be accessible later for recall. But at the moment, they are the contents of mind, part of the flow and movement of attention.

Here I’m not interested in whether Prinz provides us with the best theory of human consciousness, but I am interested in what light his view can shed on the AI project.

I’m particularly interested in Prinz here because it’s arguable that we already have artificial systems that are capable, more or less, of doing the low-level and the high-level representations described above. Edge detection, color detection, and simple feature detection in a “visual” field are relatively simple tasks for machines. And processing at a high level of conceptual abstraction has been accomplished in some cases. IBM’s Jeopardy-playing system Watson successfully answered clues such as “To push one of these paper products is to stretch established limits” (answer: envelope), “Tickets aren’t needed for this ‘event,’ a black hole’s boundary from which matter can’t escape” (answer: event horizon), and “A thief, or the bent part of an arm” (answer: crook). Even Google’s search algorithms do a remarkable job of divining the intentions behind our searches, excluding thousands of possible interpretations of our search strings that would be accurate to the letters but have nothing to do with what we are interested in.

So think about this. Simple feature detection isn’t a problem. And we are on our way to some different kinds of high-level conceptual abstraction. Long-term storage for further analysis also isn’t a problem for machines; that’s one of the things that machines already do better than us. But what Prinz has put his finger on is the ephemeral movement of attention from moment to moment in awareness.

During the course of writing this piece, I’ve been multitasking, which I shouldn’t have been doing. I’ve been answering emails, sorting out calendar scheduling, making plans to get kids from school, and so on. And now I’m trying to recall everything I’ve been thinking about over the last hour. Lots of it is available to me now. But there were, no doubt, a lot of mental contents, a lot of random thoughts, that came and went without leaving much of a trace. I say “no doubt” because if they didn’t go into memory, if they didn’t become targets of substantial focus, then even though I had them then, I won’t be able to bring them back now. And I say “no doubt” because when I attend to my conscious experience now, from moment to moment, and I’m really concentrating on just this point, I realize that I’m aware of the feeling of the clicking keyboard keys under my fingers, then I notice the music I’ve got playing in the background, then I glance at my email tab, and so on. That is, my moments are filled with miscellaneous contents. I’ve made those particular ones into a bigger deal in my brain because I just wrote about them in a blog post. But for lots of our conscious lives, maybe most, those contents come and go, like hummingbirds flitting in and out of the scene. And once they are gone, they are gone.

Now we can ask the questions: Do we want an AI to have that? Do we need an AI to have that? Would it serve any purpose?

Bottom-Up Attention

That capacity in us served an evolutionary purpose. At any given time, there are countless zombie agents, low-level neuronal complexes, doing discriminatory work on information from the sensory periphery and from other neural structures. The outputs of those discriminators may or may not end up being the subject of conscious attention. In many cases, those contents become the focus of attention from the bottom up. That is, a lower-level system deems the content important enough to call your attention to it, as it were. So when your car doesn’t sound right when it’s starting up, or when a friend’s face reveals that he’s emotionally troubled, it jumps to your attention. Your brain is adept at scanning your environment for causes for alarm and then thrusting them into the spotlight of attention for action.

Top-Down Attention

But we are able to direct the spotlight as well. We can focus our attention, sustaining mental awareness on a task or some phenomenon, to suss out details, make extended plans, anticipate problems, model out possible future scenarios, and so on. You can go to work finding Waldo.

gives a more detailed account of the evolutionary functions of consciousness.

Given what we saw above about Prinz’s distinction between conscious attention and short- and long-term memory, we can see conscious attention as a sort of screening process. A lot of ordinary phenomenal consciousness is the result of low-level monitoring systems crossing a minimal threshold of concern: this, right here, is important enough to take a closer look at.
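Prinz’s account is not an algorithm, but the screening idea can be caricatured in a few lines. This toy model assumes made-up salience scores for contents mentioned in this post (keyboard, music, email, a car that doesn’t sound right, a troubled friend’s face); the `Signal` type, the `spotlight` function, the threshold, and the capacity limit are all hypothetical. Bottom-up selection is the threshold test, top-down direction is an optional bonus for goal-relevant sources, and the small capacity stands in for limited resources.

```python
from dataclasses import dataclass

@dataclass
class Signal:
    source: str
    salience: float  # bottom-up: how alarming a low-level monitor finds it

def spotlight(signals, threshold=0.5, capacity=2, goal_bias=None):
    """Return the few sources that make it into 'attention'.

    Bottom-up: only signals whose score crosses `threshold` are eligible.
    Top-down: `goal_bias` maps source -> bonus, letting an executive boost
    whatever is relevant to the current task.
    Capacity: only the top `capacity` survivors fit; the rest are dropped.
    """
    goal_bias = goal_bias or {}
    scored = [(s.salience + goal_bias.get(s.source, 0.0), s) for s in signals]
    eligible = [(score, s) for score, s in scored if score >= threshold]
    eligible.sort(key=lambda pair: pair[0], reverse=True)
    return [s.source for _, s in eligible[:capacity]]

# Made-up salience scores, for illustration only.
signals = [
    Signal("keyboard", 0.2), Signal("music", 0.3), Signal("email", 0.4),
    Signal("engine_noise", 0.8), Signal("friends_face", 0.6),
]
print(spotlight(signals))
print(spotlight(signals, goal_bias={"email": 0.5}))
```

Without a goal, the car noise and the friend’s face win the spotlight, and the keyboard, music, and email drop out without a trace. A top-down bonus for email (say, while waiting on a reply) pushes it past both the threshold and the competition, which bumps the friend’s face.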

Part of the reason that the window of our conscious attention is temporally brief and spatially finite is that resources are limited. Resources were limited when evolution was building the system. It’s kludged up from parts and systems that were re-adapted from other functions. There was no long view or deliberate planning in the process, just the slow pruning of mutation branches on the evolutionary tree. Natural selection modifies the gene pool according to the rates at which organisms, equipped as they are, manage to meet survival challenges.

Kludge: Consider two different ways to work on a car. You could take it apart, analyze the systems, plan, make modifications, build new parts, and then reassemble the car. While the car is taken apart and while you are building new parts, it doesn’t function. It’s just a pile of parts on the shop floor.

But imagine that the car is in a race, and there’s a bin of simple replacement parts on board, some only slightly different from the ones currently in the car, and modifications to the car must be made while the car is racing around the track with the other cars. The car has to keep going at all times, or it’s out of the race for good. Furthermore, no one gets to choose which parts get pulled out of the bin and put into the car. That’s a kludge.

Resources are also limited because evolution built a system that does triage. The cognitive systems just have to be good enough to keep the organism alive long enough to bear its young, and possibly make a positive contribution toward their survival. The monitoring systems that are keeping track of its environment just need to catch the deadly threats, and catch them only far enough in advance to save its ass. It’s not allowed the luxury of long-term, substantial contemplation of one topic or many to the exclusion of all others. Furthermore, calories are limited. Only so many can be scrounged up during the course of the day, so only so many can be dedicated to the relatively costly expenditure of billions of active neural cells.

The evolutionary functions of consciousness for us give us some insight into whether it might be useful or dangerous in an AI. First, AIs can be better planned and better designed than evolution’s brains. An AI need not be confined to triage functions, although we can imagine modeling human brains to some extent and using them to keep watch on bigger, more complex systems where more can go wrong than human operators could keep track of. An AI might run an airport better, or a subway system, or a power grid, where hundreds or thousands or more subsystems need to be monitored for problems. The success of Google’s self-driving cars already suggests what could be possible with widespread implementation on our streets and highways. So bottom-up monitoring could clearly be useful in an AI system.

Top-down, executive-directed control of the spotlight of attention, and the deliberate investment of processing resources into a representational complex, with longer-term planning and goal-directed activity driving the attention, could clearly be useful for an AI system too. “Hal, we want you to find a cure for cancer. Here are several hundred thousand journal articles.”

The looming question, of course, is: what about the dangers of building mid-level attention into an AI? Bostrom’s Superintelligence has been lurking in the back of my mind through this whole post. It’s a big topic. I’ll save that for a future post, or 3 or 10 or 25.

Friday, June 5, 2015

Evil Demonology and Artificial Intelligence

Eliminativists eliminate.

In history, the concepts and theories that we build about the world form a scaffold for our inquiries. As the investigation into some phenomenon proceeds, we often find that the terms, the concepts, the equations, or even whole theories have gotten far enough out of sync with our observations to require consignment to the dustbin of history. Demonology was once an active field of inquiry in our attempts to understand disease. The humoral theory of disease was another attempt to understand what was happening to plague victims in the 14th century. Medieval healers were trying to explain a bacterial infection with Yersinia pestis some 550 years before the microbe, the real cause, had even been identified. Explanations of the disease symptoms in terms of imbalances of yellow bile, blood, black bile, and phlegm produced worthless and ineffective treatments. So we eliminate the humoral theory of disease, demonology, the élan vital theory of life, God, Creationism, and so on as science marches on.

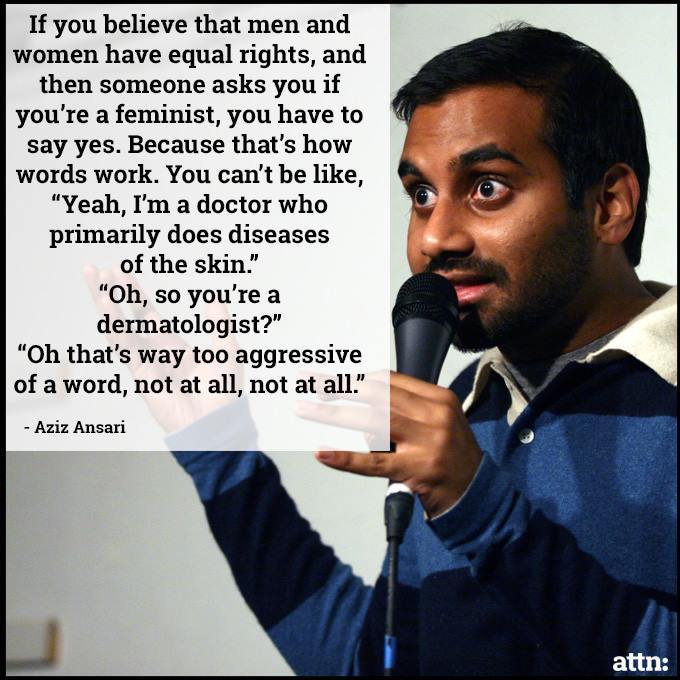

Eliminativism has become something of a dirty word among some philosophers. It’s a bit like people who are quick to insist that they believe women are equal and all that, but who won’t call themselves “feminists” because that’s too harsh or strident.

But we can and should take an important lesson from eliminative materialism (EM), even if we don’t want to be card-carrying members.

Theory changes can be ontologically conservative or ontologically radical, depending on the extent to which they preserve the entities, concepts, or theoretical structures of the old account. That is, we can be conservative: we can hold onto the old terms, the old framework, the old theory, and revise the details in light of the new things we learn.

Here’s how the Churchlands explain the process.

We begin our inquiry into what appears to be several related phenomena, calling it “fire.” Ultimately, when a robust scientific theory about the nature of the phenomena is in place, we learn that some of the things, like fireflies and comets, that we originally thought were related to burning wood are actually fundamentally different. And we learn that “fire” itself is not at all what we originally thought it was. We have to start with some sort of conceptual scaffolding, but we rebuild it along the way, jettison some parts, and radically overhaul others.

My point, then, is that we must take a vital lesson from the eliminativists about the AI project. At the outset of our inquiry, it seems like terms such as “thinking,” “consciousness,” “self-awareness,” “thoughts,” “belief,” and so on identify real phenomena in the world. These terms seem to carve nature at its joints, as they say. But we should be prepared, we should even be eager, to scrap the term, overhaul the definition, toss the theory, or otherwise regroup in light of important new information. We are rapidly moving into the golden age of brain science, cognitive science, and artificial intelligence research. We should expect that to produce upheaval in the story we’ve been telling for the last several hundred years of thinking about thinking. Let’s get ahead of the curve on that.

With that in mind, I’ll use these terms in what follows with a great big asterisk (*): this is a sloppy term that is poorly defined and quite possibly misleading, but we’ve gotta start somewhere.

Folk psychological terms that I’m prepared to kick to the curb: belief, idea, concept, mind, consciousness, thought, will, desire, freedom, and so on. That is, as we go about theorizing about and trying to build an AI, and someone raises a concern of the form “But what about X? Can it do X? Oh, robots will never be able to do X…,” I am going to treat it as an open question whether X is even a real thing that needs to be taken into account.

Imagine we brought a medieval healer by time machine from 14th-century France to Harvard Medical School. We show him around, we show him all the fancy modern tools we have for curing disease, we show him all the different departments where we address different kinds of disease, and we show him lots of cured patients. He’s suitably impressed and takes it all in. But then he says, “This is all very impressive, and I am amazed by the sights and things going on here. But you call yourselves healers? What you are doing here is interesting, but where are your demonologists? In 700 years, have you not made any progress at all addressing the real source of human suffering, which is demon possession? Where is your department of demonology? Those are the modern experts I’d really like to talk to.”

We don’t want to end up being that guy.

We should expect, given the lessons of history, that some of the folk psychological terms we’ve been using will turn out not to identify anything real, some will turn out not to be what we thought they’d be at all, and we’re going to end up filling in the details about minds in ways that we didn’t imagine at the outset.

Let’s not be curmudgeonly theorists, digging in our heels and refusing to innovate our conceptual structures. But on the other hand, let’s also not be too ready to jump onto every new theoretical bandwagon that comes along.

Wednesday, June 3, 2015

Turing and Machine Minds

In 1950, mathematician Alan M. Turing proposed a test for machine intelligence. If a human interrogator could not distinguish between the responses of a real human being and those of a machine built to hold conversations, then we would have no reason, other than prejudice, for not admitting that the machine was in fact conscious and thinking. http://orium.pw/paper/turingai.pdf

I won’t debate the merits or sufficiency of the Turing Test here. But I will use it to introduce some clarifications into the AI discussion.

Turing thought that if a machine could do some of the things we do, like have conversations, that would be an adequate indicator of the presence of a mind. But we need to get clear on the goal in building an artificial intelligence. Human minds are what we have to work with as a model, but not everything about them is worth replicating or modeling. For example, we are highly prone to confirmation bias, we have loss aversion, and we can only hold about 7-10 digits (a phone number) in short-term working memory. Being able to participate in a conversation would be an impressive feat, given the subtleties and vagaries of natural language. But it’s a rather organic, idiosyncratic, and anthropocentric task. And we might invest substantial effort and resources into replicating contingent, philosophically pointless attributes of the human mind instead of fully exploring the possibilities of a new, artificial mind of a different sort. Japanese researchers, for example, have invested enormous amounts of money and effort into replicating subtle human facial expressions on robots. Interesting for parties, maybe, but we shouldn’t get lost up side tributaries as we move up the river to the source of mind.

One of the standard objections to Turing’s thesis is this: but a Turing machine/artificial intelligence system can’t/doesn’t _______________, where we insert one of the following:

a. make mistakes (Trivial to build in, but inessential and unimportant.)

b. have emotions (Inessential, and philosophically and practically uninteresting.)

c. fall in love (Yawn.)

d. care/want (Maybe this is important. Perhaps having goals is essential/interesting. It remains to be seen whether this can be built into such a system. More on goals later.)

e. freedom (Depends on what you mean by freedom. Short answer: there don’t appear to be any substantial a priori reasons why an artificial system cannot be built that has “freedom” in the sense that’s meaningful and interesting in humans. See Hume on free will. http://www.iep.utm.edu/freewill/)

f. produce original ideas (What does original mean? A new synthesis of old concepts, contents, forms, styles? That’s easy. Watson, IBM’s Jeopardy-dominating system, is being used to make new recipes and lots of innovative, original solutions to problems.)

g. creativity (What does this mean? Produce original new ideas? See above. Complex systems, such as Watson, have emergent properties. They are able to do lots of new things that their creators/programmers did not foresee.)

h. do anything that it’s not programmed to do (“Programmed” is outdated talk here. More later on connectionist systems. Can sophisticated AI programs do unpredictable things now? Yes. Can they now do things that the designers didn’t anticipate? Yes. Will they do more in the future as the technology advances? Yes.)

i. feel pleasure or pain (I’ll concede, for the moment, that building an artificial system that has this capacity is a ways off technologically. And I’ll concede that it’s a very interesting philosophical question. I won’t concede that building this capacity in is impossible in principle. And we must also ask: why is it important? Why do we need an AI to have this capacity?)

j. intelligence

k. consciousness

l. understand

m. qualitative or phenomenal states (See Tononi, Koch, and McDermott)

I think objections a-h miss the point entirely. I take it that for a-h, the denial that a system can be built with the attribute is either simply false or will be proven false, or else the attribute isn’t interesting or important enough to warrant the attention. Items i through m, however, are interesting, and there’s a lot more to be said about them. For each, we will need more than a simple denial without argument. We need an argument with substantial, principled, non-prejudicial reasons for thinking that these capacities are beyond the reach of technology. (In general, history should have taught us to be very skeptical of grumbling naysaying of the form “This newfangled technology will never be able to X.”) But one of the things I’m going to be doing in the blog in the future is cashing out in much more detail what the terms intelligence, consciousness, understand, and phenomenal states should be taken to mean in the AI project context, and working out the details of what we might be able to build.

But more importantly, I think the list of typical objections to Turing’s thesis raises this question: just what do we want one of these things to do? Maybe someone wants to simulate a human mind to a high degree of precision. I can imagine a number of interesting reasons to do that. Maybe we want to model the human neural system to understand how it works. Maybe we ultimately want to be able to replicate or even transfer a human consciousness into a medium that doesn’t have such a short expiration date. Maybe we want to build helper robots that are very much like us and that understand us well. Maybe a very close approximation of a human mind, with some suitable tweaks, could serve as a good, tireless, optimally effective therapist. (See the early AI experiments with a therapy program, such as Joseph Weizenbaum’s ELIZA.)

But the human brain is a kludge. It’s a messy, organic amalgam of a lot of different models and functions that evolved under one set of circumstances and later got repurposed for doing other things. The path that led from point A to point B, where B is the set of cognitive capacities we have, is convoluted, circuitous, full of fits and starts, and peppered with false starts, tradeoffs, unintended consequences, byproducts, and the like.

A partial list of endemic cognitive fuckups in humans from Kahneman and Tversky (and me): Confirmation Bias, Sunk Cost Fallacy, Asch Effect, Availability Heuristic, Motivated Reasoning, Hyperactive Agency Detection, Supernaturalism, Promiscuous Teleology, Faulty Causal Theorizing, Representativeness Heuristic, Planning Fallacy, Loss Aversion, Ignoring Base Rates, Magical Thinking, and Anchoring Effect.

So with all of that said, again, what do we want an AI to do? I don’t want one to make any of the mistakes on the list just above. And I think that we shouldn’t even be talking about mistakes, emotions, falling in love, caring or wanting, freedom, or feeling pleasure or pain. What these things show incredible promise at doing is understanding complex, challenging problems and then devising remarkable and valuable solutions to them.

Watson, the Jeopardy-dominating system built by IBM, has been put to use devising new recipes. Chef Watson is able to interact with would-be chefs, compile a list of preferred flavors, textures, or ingredients, and then create new recipes, some of which are creative, surprising, and quite good. The tamarind-cabbage slaw with crispy onions is delicious, I hear. But within this seemingly frivolous application of some extremely sophisticated technology, there is a more important suggestion. Imagine that Watson’s ingenuity is put to work in a genetics lab, in a cancer research center, in an engineering firm building a new bridge, or at the National Oceanic and Atmospheric Administration predicting the formation and movement of hurricanes. I submit that building a system that can grasp our biggest problems, fold in all of the essential variables, and create solutions is the most important goal we should have. And we should be injecting huge amounts of our resources into that pursuit.

Tuesday, June 2, 2015

Building Self-Aware Machines

The public mood toward the prospect of artificial intelligence is dark. Increasingly, people fear the results of creating an intelligence whose abilities will far exceed our own, and which pursues goals that are not compatible with our own. See Nick Bostrom’s Superintelligence: Paths, Dangers, Strategies for a good summary of those arguments. I think resistance is a mistake (and futile), and I think we should be actively striving toward the construction of artificial intelligence.

When we ask “Can a machine be conscious?”, I believe we often miss several important distinctions. With regard to the AI project, we would be better off distinguishing at least between qualitative/phenomenal states, exterior self-modeling, interior self-modeling, information processing, attention, sentience, executive top-down control, self-awareness, and so on. Once we make a number of these distinctions, it becomes clear that we have already created systems with some of these capacities, others are not far off, and still others present the biggest challenges to the project. Here I will focus on just two, following Drew McDermott: interior and exterior self-modeling.

A cognitive system has a self-model if it has the capacity to represent, acknowledge, or take account of itself as an object in the world with other objects. Exterior self-modeling requires treating the self solely as a physical, spatial-temporal object among other objects. So you can easily locate yourself spatially in the room, and you have a representation of where you are in relation to your mother’s house, or perhaps to the Eiffel Tower. You can also easily locate yourself temporally. You represent Napoleon as an early 19th-century French emperor, and you are aware that the segment of time you occupy comes after the segment of time he occupied. Children swinging from one bar to another on the playground are employing an exterior self-model, as is a ground squirrel running back to its burrow.

Exterior self-modeling is relatively easy to build into an artificial system compared to many other tasks that face the AI project. Your phone is technologically advanced enough to locate itself in space, relative to other objects, with its GPS system. I built a CNC machine (a Computer Numerical Control cutting system) in my garage that I “zero” out when I start it up. I designate a location in a three-dimensional coordinate system as (0, 0, 0) for the X, Y, and Z axes, and the machine then keeps track of where it is in relation to that point as it cuts. When it’s finished, it returns to (0, 0, 0). The system knows where it is in space, at least in the very small segment of space that it is capable of representing (about 36” x 24” x 5”).
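To make the CNC example concrete, here is a minimal Python sketch of an exterior self-model of that kind. It is a hypothetical illustration, not the control software of any real machine: the class name, the assumption that the origin sits at one corner of the work envelope, and the envelope limits (taken from the 36” x 24” x 5” figure above) are all assumptions. The point is only that the system represents its own location relative to a designated zero and refuses moves it has no way to represent.

```python
from dataclasses import dataclass


@dataclass
class ExteriorSelfModel:
    """Hypothetical sketch: a machine that tracks its own position relative to
    a designated zero point, inside the only region of space it can represent."""

    # Work envelope in inches along X, Y, Z (assumed: origin at one corner).
    limits: tuple[float, float, float] = (36.0, 24.0, 5.0)
    # Zeroing at start-up: wherever the tool sits is defined as (0, 0, 0).
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)

    def move(self, dx: float, dy: float, dz: float) -> None:
        """Update the self-model by a relative move, refusing to leave the envelope."""
        x, y, z = self.position
        target = (x + dx, y + dy, z + dz)
        for coord, limit in zip(target, self.limits):
            if not 0.0 <= coord <= limit:
                raise ValueError(f"move to {target} falls outside the work envelope")
        self.position = target

    def return_home(self) -> None:
        """When a job is finished, go back to the designated origin."""
        self.position = (0.0, 0.0, 0.0)


if __name__ == "__main__":
    cnc = ExteriorSelfModel()
    cnc.move(12.0, 6.0, 1.5)
    print(cnc.position)  # (12.0, 6.0, 1.5)
    cnc.return_home()
    print(cnc.position)  # (0.0, 0.0, 0.0)
```

Nothing in this model represents what the machine knows or how good that knowledge is; it only locates the machine among other points in space, which is why exterior self-modeling is the comparatively easy case.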

Interior self-modeling is the capacity to represent yourself as an information-processing, epistemic, representational agent. That is, a system has an interior self-model if it represents the state of its own informational, cognitive capacities. Loosely, it is knowing what you know and knowing what you don’t know. A system with an interior self-model is able to locate the state of its own information about the world within a range of possible states. When you recognize that watching too much Fox News might be contributing to your negativity about President Obama, you are employing an interior self-model. When you resolve not to decide which car to buy until you’ve done more research, or when you wait until after the debates to decide which candidate to vote for, you are exercising your interior self-model. You have located yourself as a thinking, believing, judging agent within a range of possible information states. Making decisions requires information. Making good decisions requires being able to assess how much information you have, how good it is, and how much more (or less) you need, or how much better you need it to be, in order to decide within your margin of error.

So in order to endow an artificial cognitive system with an interior self-model, we must build it to model itself as an information system, much as we would build it to model itself in space and time. Hypothetically, a system can have no information, or it can have all of the information. And the information it has can be of poor quality, with a high likelihood of being false, or of high quality, with a high likelihood of being true. Those two dimensions, quantity and quality, form a framework analogous to a spatial-temporal one, and the system must be able to locate its own information state within that range of possibilities. Then, if we want the system to make good decisions, it must be able to recognize the difference between the state it is in and the minimally acceptable information state it should be in. Then, ideally, we’d build it with the tools to close that gap.
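The two-dimensional information space described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the post does not say how the quantity or quality of information would be measured, so both are assumed here to be scores between 0.0 and 1.0, and the names `InfoState`, `information_gap`, and `ready_to_decide` are hypothetical. The model locates its current state, compares it with a minimally acceptable state, and reports the shortfall on each dimension.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InfoState:
    """A point in a hypothetical two-dimensional information space.

    completeness: 0.0 = no information, 1.0 = all of the information.
    reliability:  0.0 = almost certainly false, 1.0 = almost certainly true.
    """

    completeness: float
    reliability: float

    def __post_init__(self) -> None:
        # Both coordinates are assumed to live on a 0-to-1 scale.
        for name in ("completeness", "reliability"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [0, 1], got {value}")


def information_gap(current: InfoState, required: InfoState) -> InfoState:
    """Shortfall of the current state relative to the minimally acceptable state,
    per dimension (0.0 where the requirement is already met or exceeded)."""
    return InfoState(
        completeness=max(0.0, required.completeness - current.completeness),
        reliability=max(0.0, required.reliability - current.reliability),
    )


def ready_to_decide(current: InfoState, required: InfoState) -> bool:
    """True only when there is no gap on either dimension."""
    gap = information_gap(current, required)
    return gap.completeness == 0.0 and gap.reliability == 0.0


if __name__ == "__main__":
    current = InfoState(completeness=0.5, reliability=0.9)
    required = InfoState(completeness=0.75, reliability=0.8)
    print(information_gap(current, required))  # InfoState(completeness=0.25, reliability=0.0)
    print(ready_to_decide(current, required))  # False
```

In this example the system’s information is reliable but incomplete, so the gap lies entirely on the completeness axis and tells it what kind of information to go looking for before deciding.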

Imagine a doctor who is presented with a patient with an unfamiliar set of symptoms. Recognizing that she doesn’t have enough information to diagnose the problem, she does a literature search so that she can address it responsibly.

Now imagine an artificial system with reliable decision heuristics that recognizes the adequacy or inadequacy of its information base and then does a medical literature review that is far more comprehensive, consistent, and discerning than a human doctor is capable of.

At the first level, our AI system needs to be able to compile and process information that will produce a decision. But at the second level, our AI system must be able to judge its own fitness for making that decision and rectify any shortcoming in its information state.
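The two levels can be sketched as a simple control loop in Python. This is an illustrative sketch only, assuming that each piece of evidence comes with a reliability score, that “adequate” means a minimum number of items with a minimum average reliability, and that a reliability-weighted vote stands in for the first-level decision; `adequate`, `weighted_vote`, `decide`, and the iterator standing in for a literature search are all hypothetical. The second level checks the information state before the first level is allowed to conclude, and the system withholds judgment if it cannot close the gap.

```python
from collections import defaultdict
from typing import Callable, Iterator

# Hypothetical evidence format: (finding, estimated reliability in [0, 1]).
Evidence = list[tuple[str, float]]


def adequate(evidence: Evidence, min_items: int, min_avg_reliability: float) -> bool:
    """Second level: judge whether the information state is good enough to decide."""
    if not evidence or len(evidence) < min_items:
        return False
    average = sum(reliability for _, reliability in evidence) / len(evidence)
    return average >= min_avg_reliability


def weighted_vote(evidence: Evidence) -> str:
    """First level (stand-in): pick the finding with the most reliability-weighted support."""
    support = defaultdict(float)
    for finding, reliability in evidence:
        support[finding] += reliability
    return max(support, key=support.__getitem__)


def decide(
    evidence: Evidence,
    search: Iterator[tuple[str, float]],
    conclude: Callable[[Evidence], str],
    min_items: int = 3,
    min_avg_reliability: float = 0.7,
) -> str:
    """Gather more evidence until the information state is adequate, then decide."""
    evidence = list(evidence)  # work on a copy; leave the caller's list untouched
    while not adequate(evidence, min_items, min_avg_reliability):
        item = next(search, None)  # hypothetical "literature search" step
        if item is None:
            # No way left to close the gap: withhold judgment rather than guess.
            return "withhold judgment: information state still inadequate"
        evidence.append(item)
    return conclude(evidence)


if __name__ == "__main__":
    initial = [("condition A", 0.6)]
    literature = iter([("condition A", 0.9), ("condition B", 0.4), ("condition A", 0.95)])
    print(decide(initial, literature, weighted_vote))  # condition A
    print(decide([], iter([("condition B", 0.3)]), weighted_vote))
    # withhold judgment: information state still inadequate
```

In the first call, three items are not yet enough (their average reliability is below 0.7), so the loop fetches a fourth before the first level is allowed to decide. Withholding judgment when the search runs dry mirrors the human case of refusing to pick a car or a candidate until more research is done.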

Representing itself as an epistemic agent in this fashion strikes me as one of the most important and interesting ways to flesh out the notion of being “self-aware” that is often brought up when we ask the question “Can a machine be conscious?”

McDermott, Drew. “Artificial Intelligence and Consciousness.” In The Cambridge Handbook of Consciousness, edited by Zelazo, Moscovitch, and Thompson, 117–150. 2007.

Also available at: http://www.cs.yale.edu/homes/dvm/papers/conscioushb.pdf
